Hi @zynthianers!

I am coming back to the idea of fitting my upgraded V 5.1 with an SSD.

I plan to use @riban's bespoke script for copying Oram directly to the NVMe drive, invoking it from /root with:

```
$ bash zynthian_sd2nvme.sh
```

I have a few questions:

1] @riban, should I mount the NVMe drive in the system beforehand?

I surmise so, but the sequence of commands suggested in the GeekPi HAT manual seems somewhat redundant for Zynthian/Oram.

Could you suggest a minimal procedure, just enough to run your script afterwards?

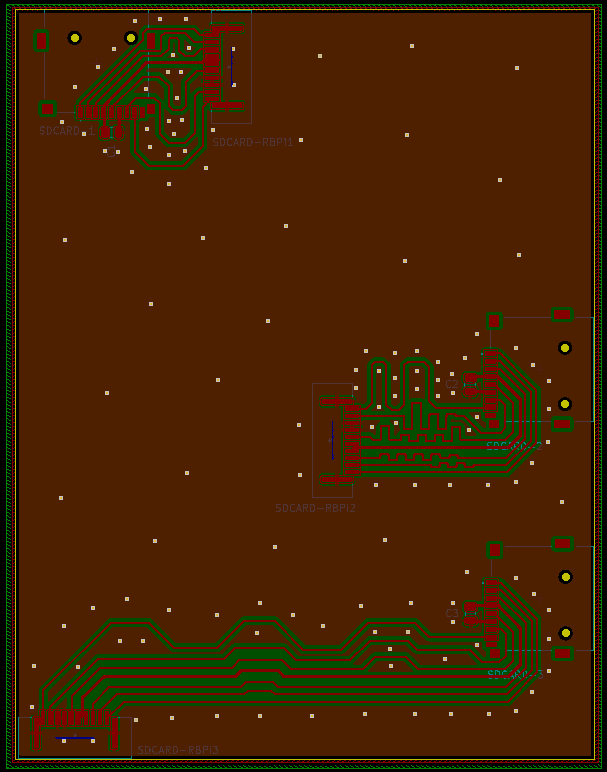

2] As I somewhat suspected, I should have installed the NVMe HAT and drive while upgrading the case to V 5.1. As things stand, the PCI Express connector is tucked away in a narrow gap between the thermal block and the main board. The latch of the PCIe port's flat-cable connector is very hard to reach with pliers or a small screwdriver, because of a raised edge on the bottom case. Moreover, sliding the flat cable into the connector in such a cramped space seems next to impossible. I am quite reluctant to remove either the main board from the bottom case or the Pi 5 from the thermal block to get better access to the SBC, because either way I would break the thermal tape padding, whose careful placement is one of the least convenient parts of building the Zynthian kit. Any suggestions, @jofemodo, on how to proceed?

3] Provided that I manage to get past the previous step, what solution should I adopt to secure the NVMe HAT in place? As @jofemodo promptly pointed out, its 2.25 mm width fits almost exactly between the V5 mainboard and the edge of the metal enclosure, and a 5x60 mm, 16-pin, 100 Ohm flat cable from AliExpress could connect the HAT to the PCIe connector.

I can foresee two ways:

1 - Drilling two holes in the bottom case and screwing two brass spacers into the matching holes of the HAT, thus holding the whole assembly in place.

2 - Using adhesive tape to stick the HAT and drive to the bottom of the case.

Solution 1 would be neater, more durable and more easily reversible from an assembly standpoint, but the drive would lose its thermal contact with the enclosure for heat dissipation.

Solution 2 would be relatively easy to implement, but somewhat makeshift and harder to modify in the future, while providing full thermal contact with the bottom case.

Any advice on that?

Thanks,

All best regards
