Hello everyone!

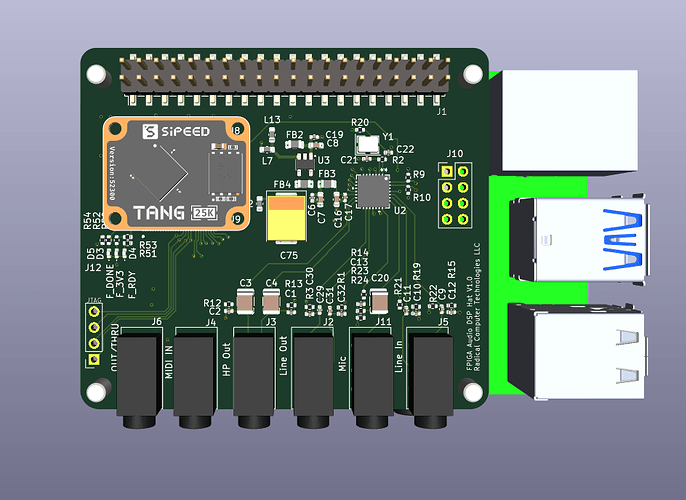

My team has recently been working on a very neat Audio DSP Hat for Raspberry Pi platforms that mates a Raspberry Pi with an FPGA and Audio Codec to allow for accelerated audio processing + performance.

As a brief overview -

The RCT FPiGA Audio hat seats a FPGA between the Raspberry Pi’s I2S lines and an AD SSM2603 audio codec to allow for various audio configurations.

To maximize capabilities and performance, the Pi is set as an I2S slave device at 384 KHz audio rate in and out to the FPGA. The SSM2603 typically is set to 48kSample and derives all clocks for this rate off of an on board crystal. The FPGA also receives this crystal’s signal and generates 384k I2S samples for the Pi.

This is so that between the FPGA and the Pi, there can be the opportunity to have 16 mono channels at 48KSample to allow for leveraging the dual processing capability before sending the mixed 48kHz stereo audio signal from the FPGA to the codec for output. On top of this, there is a stereo input as well as a mono mic input made accessible, so these can all be patched into the 16 channel audio loop as a designer would desire. Furthermore, there is hardware midi input and out/thru via 3.5mm jacks and USB.

The FPGA is pretty valuable as it allows for single sample latency DSP in most applications. It’s also controllable via I2C from the Pi and can be reprogrammed via the pi GPIO, so different FPGA designs can be loaded depending on a designer’s needs.

As an Audio enthusiast and hardware Eng professional, I love the Zynthian project.

I’m wondering if there would be any interest in integrating this with the Zynthian ecosystem as an added platform option for Zynthian? Not that a Pi is anything to sneeze at processing wise, but I can see this augmenting capabilities that’d place it well above $1k+ synthesizers and audio devices.

On the note of integration - I currently have an ALSA compatible kernel module made, device tree overlay, as well as a userspace application for managing data pipelining and communications between the Pi and the FPGA. I typically reserve a CPU core just for this purpose using ISCOLCPUS. Communication between this and other applications is facilitated between a very rudimentary and simple local server similar to how JACK works, though with some simplification. If it was considered interesting to folks here, then I would think that the easiest way to integrate would be to integrate this as an alternative to JACK/pulseaudio/etc.

I just got the latest spin of these boards in and am working on bringup as well as getting some good videos made to showcase the system. We’re looking to sell them for ~$200 a hat, so it’s not particularly cheap but I think that the combo of comparing against other audio hats that don’t really offer as much flexibility as well as the ability to treat hardware in a similar way to software VSTs with the addition of the reconfigurable FPGA, this is actually a fairly inexpensive addition. Making something like a Zynthian targeted FPiGA Kit would be something I’d be open to work with the official team here on as well if there was ever interest. Would be an interest pairing to see how much improvement could be gained in latency for this system through parallel compute platforms joining hands. Latency aside, I think there are things that could be done with a system like this that just couldn’t be done with a traditional Pi.

More details will be available here. Sorry in advance because it’s not quite mobile friendly yet -

https://radical-computer-technologies.github.io/Radical-Computer-Technologies/f-pi-ga-audio-hat-v1.html

Just polling for thoughts and feedback! I’ll post videos up as soon as we get some of the hard bringup work done.

Hope all is well with everyone!