Hi Zynthianiacs,

this is something I’ve wanted to do for the last year, and now I had some time to build my small DSO (a Bitscope-Micro with a 7" HDMI touch and a RPi2):

I created a simple test-adapter for MIDI and audio…

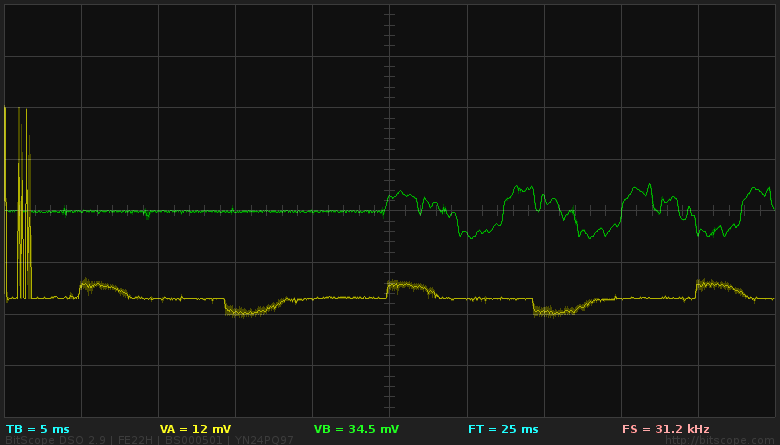

… and tested MIDI->audio latency of the standard Zynthian:

Here are the results. First I used the normal setup for the audio interface: 44.1kHz and a buffer size of 256. I tried several engines:

Pianoteq:

Linuxsampler with Synth/PadSynth:

MOD-UI with Dexed/FM-Piano:

Pianoteq and MOD-UI have a latency of 16ms. Linuxsampler has a little bit more, about 24ms.
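For orientation, here is a rough sketch of how much time a single audio buffer accounts for at 44.1kHz. It assumes the buffer sizes in this thread are counted in frames (as Jack does), and the number of periods (2 or 3) is purely an assumption for illustration, not something taken from the Zynthian configuration:

```python
def period_ms(frames: int, sample_rate: int = 44100) -> float:
    """Duration of one audio period (one buffer) in milliseconds."""
    return frames / sample_rate * 1000.0


if __name__ == "__main__":
    for frames in (256, 128):
        one = period_ms(frames)
        # The period counts 2 and 3 are assumed values for illustration only.
        print(f"{frames} frames: {one:.2f} ms per period, "
              f"{2 * one:.2f} ms for 2 periods, {3 * one:.2f} ms for 3 periods")
```

One period of 256 frames is about 5.8ms and one of 128 frames about 2.9ms, so the buffering alone explains only part of the measured values; the rest would have to come from MIDI transmission, the engine and the converters.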

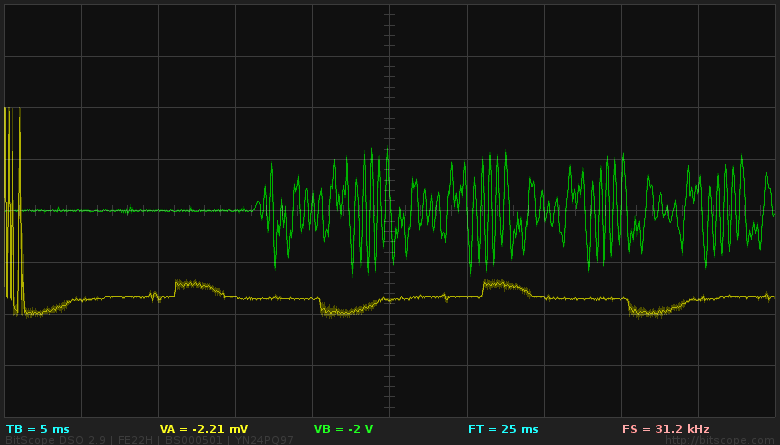

Now I restarted with a setup of 44.1kHz and a buffer size of 128:

Pianoteq:

Linuxsampler:

MOD-UI:

Hmmm… different picture: Pianoteq about 15ms, Linuxsampler 12ms, MOD-UI 11ms…

I think I will try again over the next few days to find out whether there were measurement problems or whether this result is reproducible.

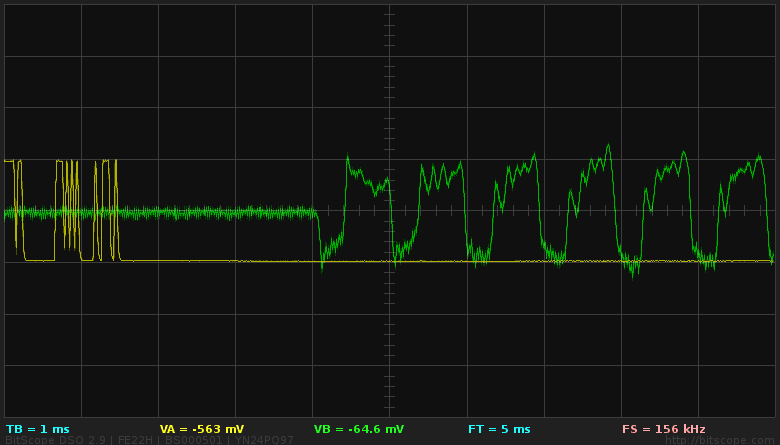

Just for comparison: the following measurements were made with my 30-year-old Roland-D110. Note: the time base is changed from 5ms to 1ms, so the latency is about 4ms!!!

Regards, Holger

Hi Holger!

Interesting results. In theory, all engines running under Jackd should have the same latency when using the same parameters… but real life is always funnier than theory.

Regards,

Not if the engine itself adds latency, like Pianoteq. It depends on the algorithm. But my results are strange. IMHO Linuxsampler adds no latency of its own, but Pianoteq does. So Pianoteq should have the higher latency and not Linuxsampler… strange. I will take a deeper look at this over the next few days.

Regards, Holger

Waking this thread for a small remark.

I used to do similar measurements way back when - recording the raw MIDI signal and audio from various synths - and I believe the latency should be measured from where the MIDI signal ends to where the audio starts. This is because any system always has to receive the full MIDI message before anything can be computed and sent to audio out. So with MIDI there’s an inevitable latency of approximately 1 ms for every single note played (unless the ancient “running status” function of MIDI is used, in which case it might be a bit lower for intermediate notes). This is independent of the latency of the receiving unit.
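The "almost 1 ms" figure can be checked with a little arithmetic. This is only a sketch, assuming standard serial MIDI at 31250 baud with 10 bits on the wire per byte (1 start bit, 8 data bits, 1 stop bit); the function name is made up for this example:

```python
MIDI_BAUD = 31250      # bits per second on a standard serial MIDI link
BITS_PER_BYTE = 10     # 1 start bit + 8 data bits + 1 stop bit


def midi_wire_time_ms(num_bytes: int) -> float:
    """Time needed to transmit num_bytes over serial MIDI, in milliseconds."""
    return num_bytes * BITS_PER_BYTE * 1000.0 / MIDI_BAUD


if __name__ == "__main__":
    # A full Note On is 3 bytes: status, note number, velocity.
    print(f"Note On, full message:  {midi_wire_time_ms(3):.2f} ms")
    # With running status the status byte is omitted, leaving 2 bytes.
    print(f"Note On, running status: {midi_wire_time_ms(2):.2f} ms")
```

So a full Note On takes about 0.96 ms on the wire and about 0.64 ms with running status.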

Hm, yes, you can look at it that way. However, the time for a MIDI message is identical for all synths, since the bit rate does not change. But if you compare with an acoustic instrument, you have to take into account sending and processing of the MIDI signal. And actually you need the latency IMHO mainly to judge the playability for humans.

I was once involved in a project that organized distributed concerts (soundjack). Latency is the most important thing, because the normal musician can handle up to (IMHO) 20ms, but after that it gets difficult. Therefore, for connections in Germany we used a backbone with few hops and a lot of bandwidth (and above all little load). The pianist was in Berlin (where I was the network and sound engineer), drums, sax and bass/guitar were in Luebeck and the trombone in Paris. The pianist was a professional musician and said that despite a latency of 15ms (one-way, so double that for the round trip) it was extremely difficult to stay in rhythm. The whole thing then starts to “swing”.

Therefore I think that for usable comparisons we have to use the complete time as the latency measurement, i.e. from the positive edge of the first bit of the MIDI message until the first rise of the audio signal - at least if we have to judge the playability for humans.
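As a rough sketch of that measurement rule: take two captured traces (MIDI and audio), find the first point where each one leaves its idle level, and convert the difference to milliseconds. Everything here is an assumption for illustration: the function names, the thresholds, and the idea that both traces are sampled at the same rate and are zero-referenced to their idle level. It is not how the Bitscope software does it.

```python
def first_crossing(samples, threshold):
    """Index of the first sample whose magnitude exceeds threshold, or None."""
    for i, value in enumerate(samples):
        if abs(value) > threshold:
            return i
    return None


def latency_ms(midi_trace, audio_trace, sample_rate_hz,
               midi_threshold, audio_threshold):
    """Time from the first MIDI edge to the first audio rise, in milliseconds.

    Returns None if either trace never crosses its threshold.
    """
    midi_start = first_crossing(midi_trace, midi_threshold)
    audio_start = first_crossing(audio_trace, audio_threshold)
    if midi_start is None or audio_start is None:
        return None
    return (audio_start - midi_start) * 1000.0 / sample_rate_hz


if __name__ == "__main__":
    # Synthetic traces, 10 kHz capture rate (made-up data, not a measurement).
    midi = [0.0] * 10 + [3.3] * 190      # first MIDI edge at sample 10
    audio = [0.0] * 170 + [0.5] * 30     # audio starts at sample 170
    print(latency_ms(midi, audio, 10_000, 1.0, 0.1), "ms")
```

To measure from the end of the MIDI message instead, as suggested earlier in the thread, one would subtract the transmission time of the message (roughly 1 ms) from this value.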

Regards, Holger